April 2020

Dear Reader,

If demographic benchmarks and trends (things like age, gender, race, ethnicity, and region) are essential to your business and the research you conduct, then this newsletter has information you absolutely need to know.

Why? Because the U.S. Census Bureau continues to change how it collects and classifies demographic data. Right now the Bureau is engaged in its every-ten-years BIG census that will survey every household in the nation. It will have a lasting impact on all of us for at least the next ten years.

Our feature article on How to Ask Race & Ethnicity on a Survey highlights what the Census Bureau is doing, and how it will affect your business and the research you conduct.

Other items of interest in this newsletter include:

- Why Your CEO Loves NPS: It Is Never Audited, and It Never Declines

- Why You Should Be Using Conjoint Analysis

- Avoid Numeric Response Scales in Surveys (Here’s Why)

- Forget the Math: A Conceptual Guide for Good Sampling

- AI (Artificial Intelligence) Just Rescued Our Survey!

- New Data on COVID-19 Highlights Crucial Role of Research

- How to Trap Survey Trolls: Ask Them for a Story

- Five Best Practices for Keeping Your Data “Anonymous”

- If Truth Matters, Prove It with a Survey

- A Video Report Example with Animation and Insight

We are also delighted to share with you:… which showcases some of our recent work for the Alzheimer’s Association, a customer satisfaction survey highlighted in the latest issue of Quirk’s, and a masterclass for business students at the University of Illinois in Chicago.

As always, feel free to reach out with an inquiry or with questions you may have. We would be pleased to consult with you on your next research effort.

Happy spring,

The Versta Team

How to Ask Race & Ethnicity on a Survey

The most thoroughly tested and validated survey questions ever on the planet are probably the 2020 Census questions on race and ethnicity. Census Bureau researchers have spent tens of millions of dollars over the past decade interviewing hundreds of thousands of Americans about race.

As a result, the Census Bureau has implemented a few changes for the 2020 decennial census, one piece of which involves documenting the race and ethnicity of every person in the United States. Does it mean you should be replicating the Census questions in your own surveys?

Possibly yes, probably no.

To help you sort through the issues, this article describes recent changes to the 2020 Census and helps you assess how the new race and ethnicity questions will likely affect your own research and survey work in the future.

Here is what we cover:

- Race and ethnicity are asked differently from years past. We’ll show you the old and the new, and explain why Census Bureau researchers had hoped for even bigger changes.

- The new questions are complex, just like the old ones, which some people find confusing. But survey respondents are resilient. We’ll provide a brief overview of how the questions were validated.

- You will have to incorporate the new questions into your work. There is no choice if you want to reference census data. But should it change your approach to asking about race and ethnicity in your own surveys? We’ll show you our recommended approach.

No, the Census Race & Ethnicity Questions Are Not That Confusing

We’ll start with the second item noted above, because it was a complaint by an industry colleague that prompted us to begin looking at the issue more closely for this newsletter.

Here is what this colleague posted on a market research discussion forum:

The race question is very odd. I don’t think any of us in the research community (quant or qual) would have asked the question this way. The question groupings and listed categories are very odd. And the required open-end is bound to get a bunch of garbage. How on earth will they analyze the open-ends and find meaningful, quantifiable data?

Researchers certainly can act like know-it-alls when it comes to designing surveys (even the ones who don’t really design surveys). I can sympathize, because I, too, am prone to critiquing other people’s surveys. Moreover, I agree that the new race and ethnicity questions are not ideal.

But wait. It turns out the researchers at the U.S. Census Bureau agree as well, so let’s give them the credit they deserve. They know tons more about how to ask these questions than any online critics in the market research industry. In fact, they spent over ten years and millions of dollars testing their questions. Right now the 2020 Census questions on race and ethnicity may well be the most thoroughly tested and validated survey questions ever on the planet.

Way back in 2010 the Bureau conducted an experiment to begin preparing for the 2020 Census. Their experiment was “the largest quantitative effort ever on how people identify their race and ethnicity.” It involved experimental questionnaires mailed to a sample of 488,604 households during the 2010 Census. Then they called over 60,000 of those households to re-interview them by telephone. In addition, they conducted 67 focus groups across the United States and in Puerto Rico with nearly 800 people. A detailed account of their methods and findings is available in a 151-page report.

Even before that effort, the Bureau conducted one-on-one cognitive interviewing to test for misunderstandings or misinterpretations of the wording. Here is what that involved:

The protocol for the cognitive interviews combined verbal think-aloud reports with retrospective probes and debriefing. Each cognitive interview involved two interviewers working together, face-to-face with the respondent. One interviewer read the RI questionnaire as if he or she was conducting the actual phone interview. Meanwhile, another interviewer observed the interview, took notes, and later asked cognitive interview and retrospective debriefing questions after the RI questionnaire was complete. The cognitive interview and probing questions aimed to explore respondents’ understanding of the race and Hispanic origin questions, their typical response to these questions, any variation that they might have in reporting race and origin, and their sense of burden of the interview. The debriefing probes were semi-scripted, allowing the interviewer to probe on things that occurred spontaneously while also covering a set of required material.

Then in 2015 the Bureau launched another round of research. They tested question format (one question vs. two), response categories (including Middle Eastern or North African, for example), instruction wording (using phrases like “select all that apply”), and question terminology (such as race, origin, and ethnicity). The goal was to compare alternative versions of the question and to understand any potential problems with mode effects (mail questionnaire vs. online vs. in-person). They sampled and mailed to over 1 million households, and selected about 100,000 of those households for follow-up phone interviews. You can read about their methods and findings for this phase of research in a 380-page report.

If only we could all test our survey questions this thoroughly and so painstakingly!

It is true that the current ethnicity and race questions are not ideal (see the next section for why). But it is absolutely not true that the results will be “garbage.” At this point, Census Bureau researchers know a great deal about how people respond to the questions laid out on the census forms. And they know how to code and tabulate the responses to get a valid and reliable profile of our population.

The New vs. the Old

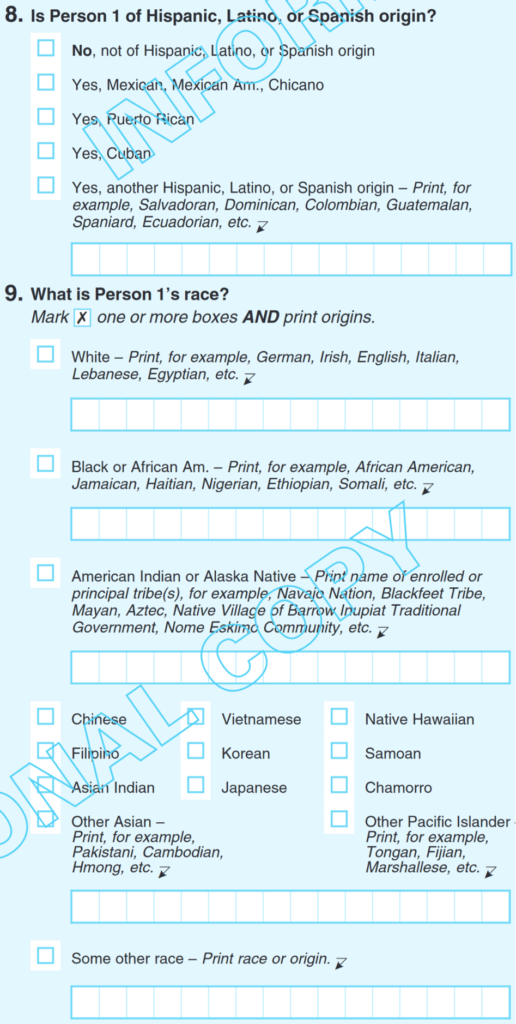

Wondering what all the fuss is about? Here are the new 2020 Census questions on ethnicity and race:

Compare this with 2010:

There is one reason neither version is ideal, and it has nothing to do with open-end boxes. It’s because Hispanic or Latino is asked separately from race, so when Hispanic/Latino respondents get to the question about race, many of them will select “other.”

Census researchers know this. They strongly recommended that Hispanic/Latino be integrated into the race question for the 2020 Census. But they ran up against a 1997 federal directive (issued by the Office of Management and Budget) requiring federal agencies to specify five minimum categories for data on race (American Indian or Alaska Native; Asian; Black or African American; Native Hawaiian or Other Pacific Islander; White) and two categories for data on ethnicity (Hispanic or Latino; Not Hispanic or Latino). The recommended changes to this directive were ignored by the White House, and unfortunately Congress did not step in to fix it.

There are, however, some other interesting changes that did not require changes to the law. First, the word Negro has been dropped. Second, most respondents are now asked to specify their origins along with their race. The idea is to capture a more nuanced portrait of which countries, tribes, and regions of the world Americans of all races come from.

How to Ask Race on YOUR Survey

For several years we wrote surveys that replicated the Census’ two-question format asking for race and ethnicity, and we recommended that clients do the same. The reason is that we usually must weight your data to Census benchmarks to ensure representative samples, or compare your data to those benchmarks to gain a deeper understanding of your markets.

But clients are just like other Americans! Few of them think of “Hispanic or Latino” as being a category separate from race. So in our analysis and reporting we found ourselves nearly always recoding the data into a single race variable, and then applying that same recoding to Census numbers to make our comparisons.
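For readers who handle their own data, here is a minimal Python sketch of that kind of recoding. It assumes one common convention: anyone who answers yes to the Hispanic/Latino question is counted as Hispanic or Latino regardless of race, and everyone else is classified by race. The function name, the category labels, and the "No answer" fallback are all hypothetical, and this is not necessarily the exact recoding we apply.

```python
from collections import Counter

def recode_race_ethnicity(is_hispanic, races):
    """Collapse a two-question (ethnicity + race) answer into one category.

    is_hispanic: True if the respondent chose a Hispanic/Latino option.
    races: list of race categories the respondent selected (may be empty).
    Assumed convention: Hispanic/Latino takes priority over race.
    """
    if is_hispanic:
        return "Hispanic or Latino"
    selected = set(races)
    if len(selected) > 1:
        return "Two or more races"
    if len(selected) == 1:
        return selected.pop()
    return "No answer"  # hypothetical label for respondents who skipped race

# Illustrative respondents only, not real survey data
respondents = [
    (True, ["White"]),
    (False, ["Asian"]),
    (False, ["White", "Black or African American"]),
    (False, []),
]
tally = Counter(recode_race_ethnicity(h, r) for h, r in respondents)
```

The same function can be applied to Census counts that are broken out by Hispanic origin and race, so that the survey data and the benchmarks share one set of categories.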

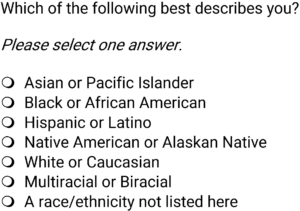

Over the last few years, then, we landed on this version of a race/ethnicity question for our surveys:

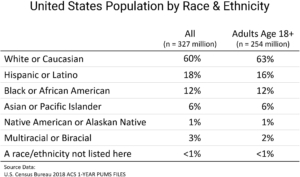

Note that the response options are in alphabetical order, except for the last two lines. We have found that this question works extremely well. Few people use the “other” category. It doesn’t offend or confuse people. And then with just a little bit of simple analysis and coding work, it easily maps onto current and future Census data:

This is how we now recommend most clients ask about race in their surveys, with some exceptions. If you need to track against your historical data, it may not work. If your customers are unique, varied immigrant populations (like one of our Chicago clients), you may want different details.
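Once survey categories line up with Census categories, weighting to benchmarks is mostly arithmetic. The Python sketch below shows simple post-stratification on a single variable: each group's weight is its benchmark share divided by its share of the sample. The function name, group labels, and benchmark shares are placeholders made up for illustration; they are not Census figures, and real projects typically weight on several variables at once.

```python
from collections import Counter

def poststratification_weights(sample_categories, benchmark_shares):
    """Return one weight per category: benchmark share / sample share.

    sample_categories: list with one category label per respondent.
    benchmark_shares: dict mapping each category to its population share.
    Raises KeyError if a sample category has no benchmark.
    """
    counts = Counter(sample_categories)
    n = len(sample_categories)
    return {
        category: benchmark_shares[category] / (count / n)
        for category, count in counts.items()
    }

# Placeholder data: 10 respondents, group A is over-represented
sample = ["Group A"] * 6 + ["Group B"] * 2 + ["Group C"] * 2
benchmarks = {"Group A": 0.50, "Group B": 0.25, "Group C": 0.25}  # made-up shares

weights = poststratification_weights(sample, benchmarks)
weighted_total = sum(weights[c] for c in sample)
```

In this example the over-represented group gets a weight below 1 and the other two get weights above 1, while the weighted total still equals the number of respondents.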

Whatever you do, think carefully about your approach — as carefully and thoughtfully as our colleagues in the Census Bureau have done. Although you cannot test as exhaustively as they have done, you don’t need to. The Census Bureau has provided an amazing foundation of knowledge and data you can feel confident relying on for your own work.

Stories from the Versta Blog

Here are several recent posts from the Versta Research Blog.

Why Your CEO Loves NPS: It Is Never Audited, and It Never Declines

The Wall Street Journal recently looked at the number of public companies using NPS as a measure of corporate performance in earnings calls and SEC filings.

Why You Should Be Using Conjoint Analysis

Conjoint lets you probe the depths of why customers prefer one product over another, and it lets you build a model to evaluate multiple product options.

Avoid Numeric Response Scales in Surveys — They Seem Scientific, but They Are Actually Ambiguous and Difficult to Report

If you can avoid using numeric rating scales in your surveys, you should. Why? Because they are often impossible to report in clear and meaningful ways.

Forget the Math — For Good Sampling You Need Equality, Inclusion, and Representation

For most research, pure random sampling (probability-based) is not achievable. But there are other ways to approach sampling. Here is a guide of what you will need.

AI (Artificial Intelligence) Just Rescued Our Survey — We Overlooked a Huge Mistake and a Robot Flagged It for Us

So far, robots and AI have done more harm than good in the world of market research and public opinion polling. But we just experienced a glimmer of AI hope!

Stockpiling and Job Worries: New Data on COVID-19 Highlights Crucial Role of Research

A national survey taken in the first week of widespread disruption from COVID-19 provides crucial data that will help agencies prepare for the crisis.

How to Trap Survey Trolls: Ask Them for a Story

At Versta Research we are now using our superpower of Turning Data Into Stories to help fight trolls and robots by asking just one clever open-end.

Five Best Practices for Keeping Your Data “Anonymous”

Now that sophisticated new algorithms can identify specific individuals in “anonymous” datasets, you need new best practices for how to manage your data.

If Truth Matters, Prove It with a Survey

A recent NYT article about the presidential election provides a perfect example of why journalists need surveys to substantiate the claims of their stories.

Here Is a Video Report That Engages Its Audience with Animation and Insight

One of our clients created a video report from our employee healthcare tracking study as their primary presentation of findings to stakeholders.

Versta Research in the News

Alzheimer’s Association Releases Findings on Physician Readiness

Versta Research was commissioned to conduct surveys of physicians and first-year residents about their training, readiness, and expectations for future dementia care. The results were reported by U.S. News & World Report, among other news outlets. Full details are available in the Association’s press release and in the 2020 Alzheimer’s Disease Facts and Figures report. Results are summarized in an infographic and video clip, as well.

Quirk’s Features Versta Research Survey for Chicago Bank

An article about Versta Research’s customer satisfaction survey for Devon Bank in Chicago appears in Quirk’s March/April 2020 print and online edition. The survey had a 25% response rate, far exceeding most online surveys, and was originally featured in our newsletter article, How to Beat Online Surveys with Old-Fashioned Paper.

Versta Research Coaches Business Students on Turning Data Into Stories

Joe Hopper, president of Versta Research, visited emerging new business leaders at the University of Illinois in Chicago for two weeks in February and March. Professor Jeffrey Parker wanted to showcase a better way to analyze and present market research findings.

New Research for Fidelity Investments on Money & Divorce

Fidelity Investments commissioned Versta Research for a new study on how people navigate the process of divorce financially and emotionally. Findings have so far been featured in stories by Reuters, Prevention, and Financial Advisor Magazine. Full details are available from Fidelity’s press release and Divorce and Money Study Fact Sheet.
