April 2017

Dear Reader,

Like the cobbler whose children have no shoes, Versta Research has spent a lot of time helping others with research, and zero time on our own. When it comes to customer satisfaction research, there is a good reason for that. Most CX surveys are not designed to help customers.

Next month, however, we launch a plan to survey our customers after each engagement. Our survey is different from most. In this edition of the Versta Research newsletter, Build a Better Customer Satisfaction Survey, we tell you why and how.

Other items of interest in this newsletter include:

- Try Using Tables Instead of Charts

- A New KPI for Jargon-Free Research

- Please Do Not Gamify Your Surveys

- Statistically Significant Sample Sizes

- Data Geniuses Who Predict the Past

- Dump the Hot Trends for These 7 Workshops

- Use This Clever Question to Validate Your Data

- When People Take Surveys on Smartphones

- The One Poll That Got It Wrong

- Manipulative CX Surveys

- How Many Questions in a 10-Minute Survey?

- 10 Rules to Make Your Research Reproducible

We are also delighted to share with you:… which highlights some of our recently published work about car shopping, financial advising, and how college professors save for retirement.

As always, feel free to reach out with an inquiry or with questions you may have. We would be pleased to consult with you on your next research effort.

Happy spring,

The Versta Team

Build a Better Customer Satisfaction Survey

If you run any kind of customer satisfaction survey for your company, chances are it focuses mostly on your company and not on your customers. That is the reason why I, as a customer, almost never participate.

More about that in a moment … but first I’ll tell you about the one customer satisfaction survey that is different. It is from Skype for Business. It pops up several times a month after I complete a phone call through Skype.

Here is why I usually take that survey:

1. It matters. The survey asks me to rate the call quality, one star to five stars, and to identify specific problems with the call quality. This is the second VOIP phone system my company has migrated to. With any of these systems, call quality can be inconsistent and difficult to fine-tune. But with the right tweaks, quality can be perfect.

So I fill out the survey. I want Microsoft to know. “Thanks for asking, it’s not quite right yet.” Or, “Yes, that’s perfect. Hold it right there.” I have convinced myself (or at least I hope) that my star ratings feed back into a system that continually adjusts to make choppy, electronic-sounding calls crystal clear. (So far it is working!)

2. It is short. The survey takes ten seconds to complete. It asks for a rating, and then it asks me to specify how quality was degraded. In fact, when the survey first started, the list of multi-check quality issues had just four items. Easy! And I was happy to comply.

Alas, they got greedy. The list has expanded to include eight items, each with more detail and nuance. Now I sometimes ignore the list entirely. Why? I’ve just gotten off the phone. I need to take notes or take action from the call. I don’t have time to give Microsoft a detailed accounting of how it all went.

Which leads to the topic of why I almost never participate in other customer satisfaction surveys. Most of them are greedy and self-centered. They are exactly the opposite of the Skype for Business survey I just described. How so?

They do not matter, at least not in a way that affects me immediately and tangibly. I have filled out surveys, pointed out errors, asked for help, you name it. I have taken the initiative and asked vendor teams for debriefing phone calls to review and incorporate my feedback. Forget it. They do not really want to align better with my expectations and needs. All they want is their scores. Indeed, if a direct conversation with a vendor team gets no action, why would a survey score, aggregated along with other customer survey scores, matter at all?

Unfortunately, ratings, input, and comments on most customer satisfaction surveys are not used to correct immediate problems. As a customer, the best I can hope for is that somewhere in the organization a person vetting the “insights” will help shift the culture and operations in a way that serves me in the long run. But I do not hold out much hope, because in reality, most of these surveys are designed to help them, not me.

They are self-centered. That’s the second reason I do not participate. No matter what they say, most sponsors of customer satisfaction surveys are not interested in me; they are interested in themselves. (“Enough about me. What do YOU think of me?”) They want data to calculate their “metrics” and their KPIs and their NPS scores. They want their bonuses, and they can’t have bonuses without measurable outcomes, so let’s ask our customers … “How did we do?!”

OK, I’m being harsh. Satisfaction surveys can provide important mechanisms for customer feedback when organizations are listening and committed to change. But think about the standard NPS question for a moment. How does a question like this—How likely are you to recommend Versta Research to a colleague?—help you, the customer? It doesn’t. It serves my purposes, not yours.

They are too long. I am willing to give more than ten seconds. I am even willing to give more than a few minutes if it will benefit me. But most of these surveys ask for too much. Multiple dimensions of service and product, drill-downs on every sales touchpoint, every service encounter, every member of the team … on and on. It’s asking a lot of your customers to think through and provide this data for free (or for a minuscule chance to win prizes), and it is no wonder that response rates hover around two percent.

Why do surveys cover all these dimensions and drill-downs? To build “key driver” models. That’s cool, but think about that for a moment, as well. If I tell someone exactly why I am satisfied or not, no driver analysis is needed to help me. Aggregate modeling is all about them, not about me. Long surveys are of zero immediate value to me as a customer.

Building Our Own Customer Satisfaction Survey

So in building our own customer satisfaction survey, we took inspiration from Skype for Business and we tried to eschew the annoying self-centeredness that afflicts most customer satisfaction surveys.

Our strategy: Design a survey that focuses on the research and what it achieved or failed to achieve. Avoid asking questions about ourselves or about how well we performed. Dump NPS, because a customer recommendation has no impact on how we would improve the work for you. Make sure all questions focus squarely on the outcomes of the research, and that they help point us towards improved outcomes for the future.

Versta’s Customer Satisfaction Survey Questions

There are six questions we use. Here they are, with our reasoning for each:

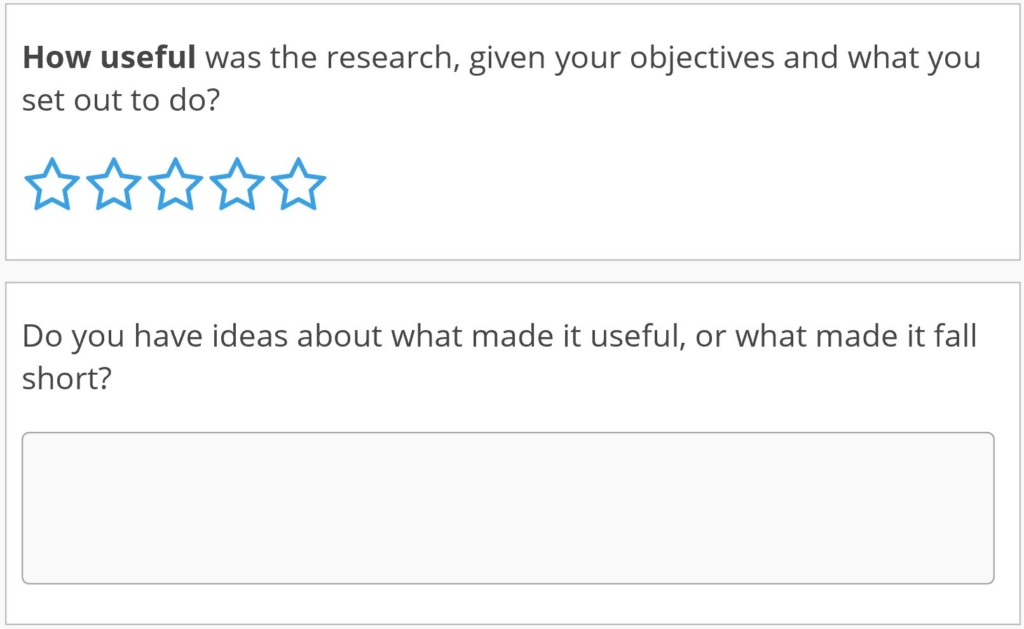

Why we ask this: If research doesn’t get used, then it was probably not worth doing. Even “actionable insights” may sit on shelves. So regardless of how satisfied you may be, we want to know if the research was used or not. And even if the answer reflects internal organizational hurdles rather than our poor performance, we need to think hard about a different approach the next time around.

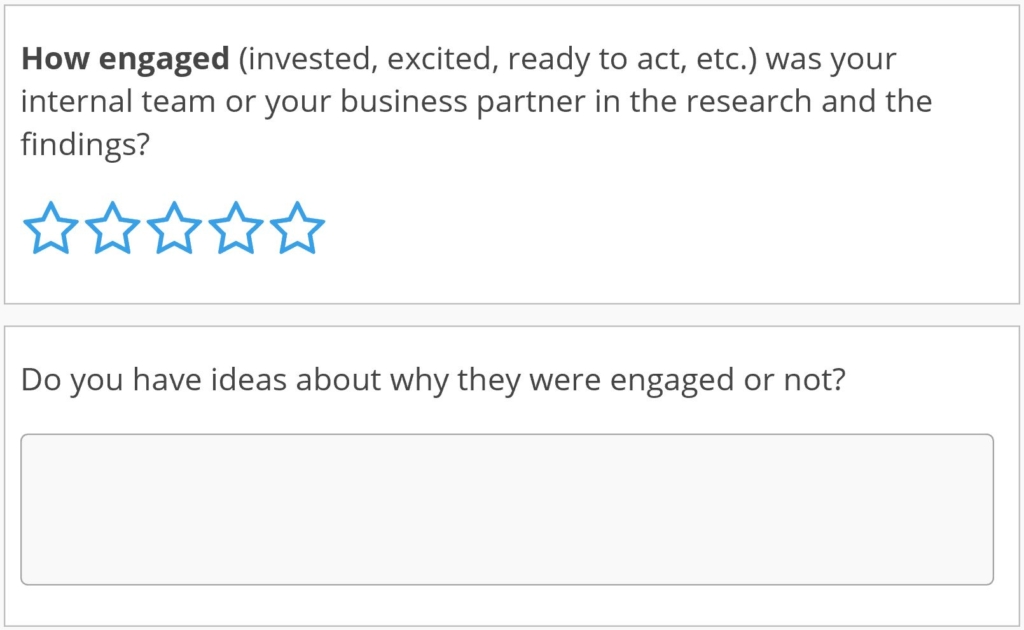

Why we ask this: Research is unlikely to be used if end-user clients are not fully invested and engaged. So we want to know: Were your internal clients engaged, invested, and excited? How did that happen, or why did it not? This provides essential clues that can help modify our approach to design and reporting in our next effort with you.

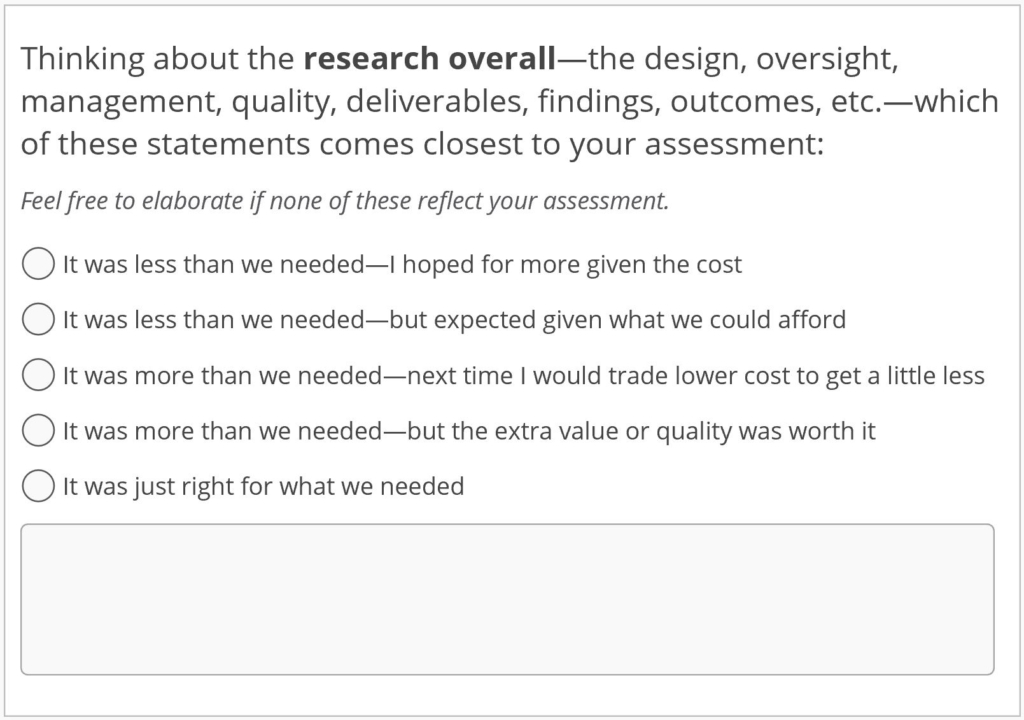

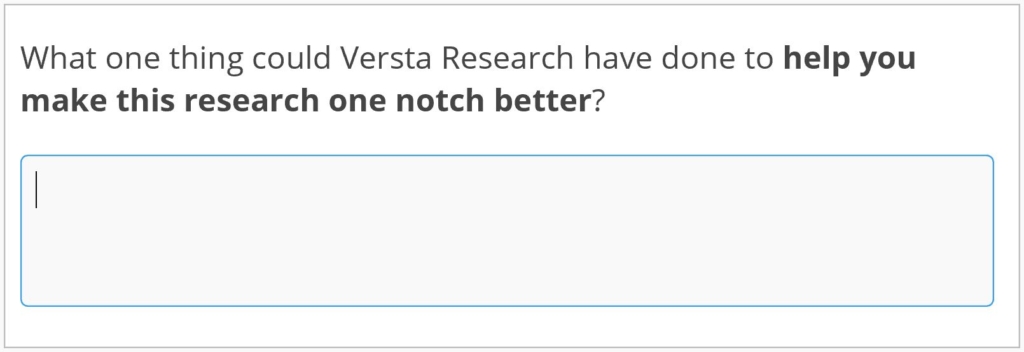

Why we ask this: One of the hardest things about research with multiple components and competing demands is calibrating each piece to meet your expectations and needs given how much you’ve spent. Our goal is to listen, calibrate and adjust. This question is like the Skype for Business question described above. Is the calibration “not quite right yet” or is it “perfect … hold it right there”?

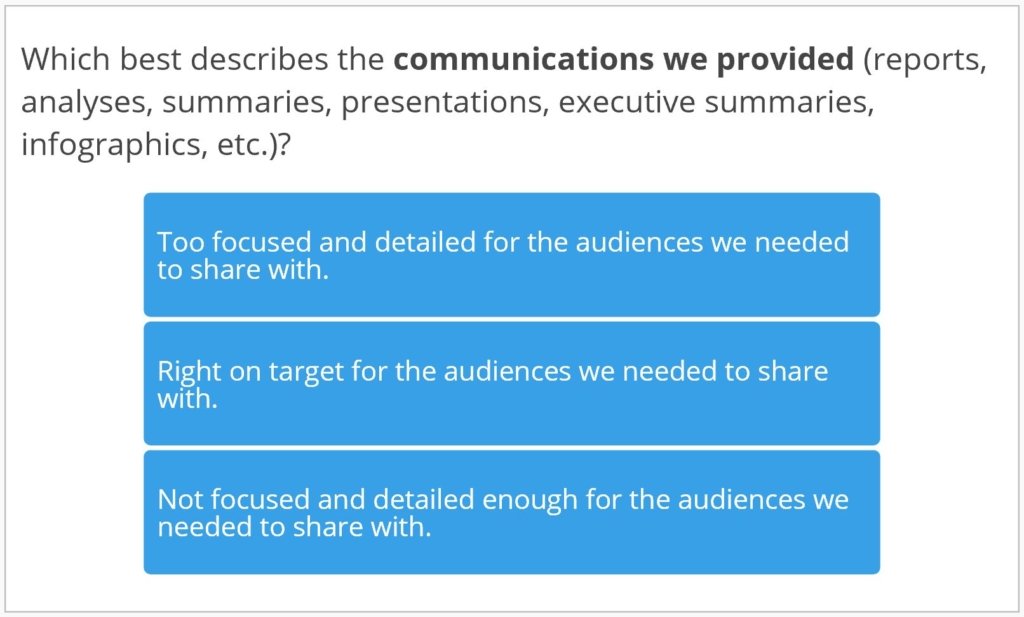

Why we ask this: Research reports are but one piece of a larger effort, but they can make or break the level of engagement, understanding, and excitement within your internal team. As outsiders, we rely on you to tell us what your audiences need. More forest? More trees? Please tell us how the reports we generate should be adjusted to strike just the right chord for you to succeed.

Why we ask this: There are always surprises we never know about … until we ask. Often they reflect a unique circumstance or organizational need, and we’ll need to incorporate that understanding next time around. Other times they reflect client preferences we assumed would never matter. Either way, tell us what matters, and it will become SOP for your next project.

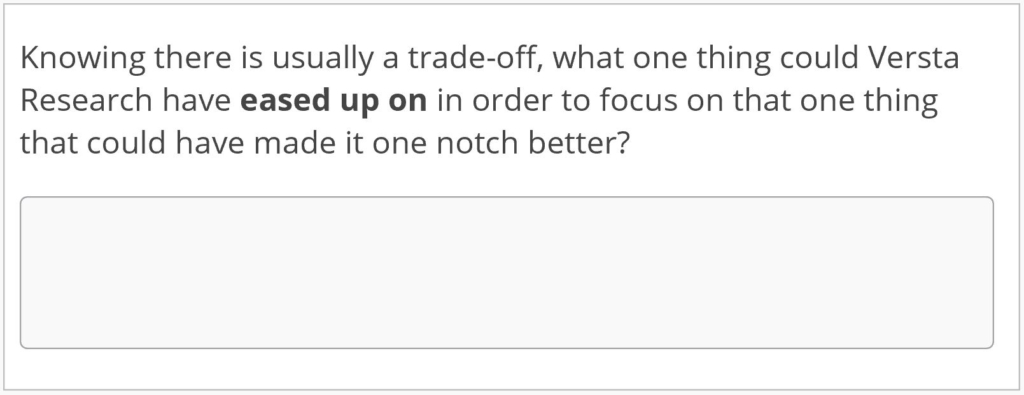

Why we ask this: Sometimes we overdeliver on things that are important to us, but which have little practical use for our clients. For example, our daily fieldwork updates always impress. But do clients really care beyond knowing we track everything carefully? Some do, some don’t. Identifying what matters less helps shift resources and energies to areas that make a bigger difference for what you need.

Actually, there is a seventh question that we added at the last minute. I will leave you in suspense about that one (you will see it if you test drive the survey). We’ll discuss the seventh question in an upcoming article, and you can watch for it via the Versta Research RSS blog feed.

I hope this has convinced you that after your next engagement with us, Versta Research’s CX survey will be worth taking. The goal of the survey is not to get high scores or boost bonuses or trumpet NPS scores on our website. The goal is to understand when, where, and how the research itself got used and made a difference, and if it didn’t, why not.

Please feel welcome to take a test drive of the survey, as well. It will give you a peek into the overall aesthetics and functionality of how we build all manner of surveys for our clients. And of course, if you have ideas for making it even more laser-focused on research outcomes that matter to you, please tell us, until we get it just right. “Yes, that’s perfect. Hold it right there.”

Stories from the Versta Blog

Here are several recent posts from the Versta Research Blog. Click on any headline to read more.

Try Using Tables Instead of Charts

Market researchers over-use charts. Often a table will work better as long as you follow a few best practices for layout and design.

A New KPI for Jargon-Free Research

When Dilbert starts making fun of the most common words in market research—actionable insights—it’s time to consider letting them go.

Please Do Not Gamify Your Surveys

Hey, wanna slash your response rates by 20%? Try rewording your survey questions to sound like fun and exciting games! Your respondents will hate it.

Statistically Significant Sample Sizes

Referring to a sample (or a sample size) as “statistically significant” is nonsense. But there are guidelines that can help you choose the right size sample.

Data Geniuses Who Predict the Past

A problem with predictive analytics is that the “predictions” are backward-looking correlations that are likely to be random noise, not brilliant insights.

Dump the Hot Trends for These 7 Workshops

Market research conferences have lots of flash, but a lot less substance. These upcoming workshops from AAPOR are an exception, and offer a great way to learn.

Use This Clever Question to Validate Your Data

It may have been a dumb oversight, but a survey from Amtrak stumbled upon an ingenious little question you can use to validate that your survey respondents are real.

When People Take Surveys on Smartphones

For most consumer surveys it is critical to offer mobile accessibility. But people on smartphones answer surveys differently. Here is what you need to know.

The One Poll That Got It Wrong

The LA Times poll consistently had Trump winning the popular vote. It missed by a long shot. The problem? Zip code sampling resulted in too many rural voters.

Manipulative CX Surveys

Here is a simple trick to get customers coming back to you, and spending more, after they take a satisfaction survey. And here’s why such tricks no longer matter.

How Many Questions in a 10-Minute Survey?

The U.S. Department of Transportation cites Versta Research as an authoritative source on how to calculate survey length. Here’s the scoop, and here’s our method.

10 Rules to Make Your Research Reproducible

Reproducible research is an emerging and important trend in research and analytics. If your research isn’t yet there, here are ten simple rules to get you started.

Versta Research in the News

Versta Research Helps Cars.com with WOM Research

New research about the power of online reviews has been published by Cars.com. Findings highlight how car shoppers use reviews, which types and sources of reviews they trust, and how they feel about negative reviews.

The Mobile Influence on Car Shopping

Cars.com partnered with Versta Research for its latest research on how mobile devices are shaping the modern car shopper’s journey, including which devices they use and how they use them.

College Profs Get a “B” for Financial Literacy

Fidelity Investments released its 2017 Higher Education Faculty Study conducted by Versta Research, which highlights how faculty and higher education employees are thinking about their readiness for retirement. Findings are highlighted in a retirement readiness infographic available from Fidelity.

Wells Fargo Advisors Helps Customers with Envision® Planning Tool

Versta Research recently helped Wells Fargo document how the Envision® process helps customers prioritize their financial goals and adapt to change.

Versta Research to Speak at Upcoming LIMRA Conference

We will be at the annual LIMRA Marketing and Research Conference in Nashville with an invited presentation on secrets of deploying survey research for high-impact PR campaigns.
