This DIY Tool Promises Statistically Significant Research

Here is a sly little test I recommend giving the next researcher who offers to help with your quantitative survey.

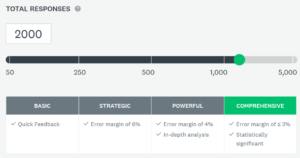

Ask if they have ever used a DIY survey tool, and what advice they would give you about sample size based on this selection tool you looked at from one of the largest DIY survey platforms on the market:

The first thing they should do is scrunch their eyebrows at those “error margins.” Maybe they will tell you the numbers should be labeled “margins of sampling error,” or even better, “maximum margins of sampling error,” because they account for only one type of error (sampling error) and because the actual margin varies from question to question, depending on how responses split. It is largest when responses divide 50/50.

They should also tell you, maybe after consulting a calculator or Versta’s interactive graph for choosing sample size, that those error margins are not quite right (the maximum margin of sampling error at n=490, for example, is closer to 4%).
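To see where a figure like 4% comes from, here is a minimal Python sketch of the textbook margin-of-sampling-error formula for a proportion, z × √(p(1 - p)/n). It assumes a simple random sample, a 95% confidence level (z = 1.96), and no finite population correction or design effect; the function names are illustrative and are not taken from any survey platform. Setting p = 0.5 gives the maximum margin, which is why the label matters: any question whose responses split less evenly has a smaller margin.

```python
import math


def max_margin_of_sampling_error(n: int, z: float = 1.96) -> float:
    """Worst-case margin of sampling error for a proportion.

    Assumes a simple random sample. Uses p = 0.5, where p * (1 - p)
    is largest, so this is the maximum across all questions.
    z = 1.96 corresponds to a 95% confidence level (an assumption).
    """
    if n <= 0:
        raise ValueError("sample size must be positive")
    return z * math.sqrt(0.5 * 0.5 / n)


def margin_of_sampling_error(p: float, n: int, z: float = 1.96) -> float:
    """Margin for one question's observed proportion p.

    It shrinks as p moves away from 0.5, which is why margins
    differ from question to question in the same survey.
    """
    if n <= 0 or not 0 <= p <= 1:
        raise ValueError("need n > 0 and 0 <= p <= 1")
    return z * math.sqrt(p * (1 - p) / n)


if __name__ == "__main__":
    for n in (100, 490, 1000, 5000):
        print(f"n={n:>5}: max margin ±{max_margin_of_sampling_error(n):.1%}")
    # Same sample, a question where only 10% choose an answer.
    print(f"n=  490, p=10%: ±{margin_of_sampling_error(0.10, 490):.1%}")
```

Under these assumptions, n=490 works out to a maximum of about ±4.4%, while the same sample gives only about ±2.7% for a question where 10% of respondents choose an answer.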

But I really hope that before long they will notice, and tell you, this: the check mark indicating that sample sizes of 1,000 to 5,000 are “statistically significant” is nonsense.

Here is a useful passage from the Reference Manual on Scientific Evidence, compiled by the Federal Judicial Center and the National Research Council:

> Many a sample has been praised for its statistical significance or blamed for its lack thereof. Technically, this makes little sense. Statistical significance is about the difference between observations and expectations. Significance therefore applies to statistics computed from the sample, but not to the sample itself, and certainly not to the size of the sample. … Samples can be representative or unrepresentative. They can be chosen well or badly. They can be large enough to give reliable results or too small to bother with. But samples cannot be “statistically significant.”

I hope the researcher offering help understands statistical significance well enough to say: “You can calculate estimates, specify the precision of those estimates, and conduct tests of statistical significance on any sample size at all. This marketing gimmick you’ve shown me is nonsense.”
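The point that significance belongs to statistics computed from a sample, not to the sample, can be made concrete with a standard two-proportion z-test. The sketch below uses only Python's standard library and runs the same test on two made-up comparisons: 60% vs. 40% with 100 respondents per group, and 52% vs. 48% with 1,000 per group. These numbers are hypothetical illustrations, not survey results, and the function name is illustrative. The z-test relies on a normal approximation; with very small counts, an exact test such as Fisher's would be the usual substitute.

```python
import math


def two_proportion_z_test(x1: int, n1: int, x2: int, n2: int) -> tuple[float, float, float]:
    """Two-sided z-test for the difference between two independent proportions.

    x1 of n1 respondents in group 1 and x2 of n2 in group 2 gave an answer.
    Uses the pooled proportion under the null hypothesis of no difference.
    Returns (difference in proportions, z statistic, two-sided p-value).
    """
    p1, p2 = x1 / n1, x2 / n2
    pooled = (x1 + x2) / (n1 + n2)
    if not 0 < pooled < 1:
        raise ValueError("pooled proportion must be strictly between 0 and 1")
    se = math.sqrt(pooled * (1 - pooled) * (1 / n1 + 1 / n2))
    z = (p1 - p2) / se
    # Two-sided p-value from the standard normal: P(|Z| >= |z|) = erfc(|z| / sqrt(2)).
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return p1 - p2, z, p_value


if __name__ == "__main__":
    # Hypothetical comparisons for illustration only.
    examples = [
        ("60% vs 40%, n=100 per group", (60, 100, 40, 100)),
        ("52% vs 48%, n=1000 per group", (520, 1000, 480, 1000)),
    ]
    for label, args in examples:
        diff, z, p = two_proportion_z_test(*args)
        print(f"{label}: diff={diff:+.0%}, z={z:.2f}, p={p:.3f}")
```

Under these assumptions, the smaller comparison yields p ≈ 0.005, while the larger one, squarely in the “statistically significant” tier, yields p ≈ 0.07 and would not be significant at the 0.05 level. What drives the result is the size of the difference relative to its standard error, not a sample-size tier.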

I sort of admire the marketing people working for this DIY behemoth for applying their tiered packaging model (good-better-best … “most popular” … “best value” …) to a survey tool, and for trying to differentiate the tiers on meaningful criteria. But, really, marketing people also need smart and serious-minded researchers to tell them when their ideas are simply nonsense.

—Joe Hopper, Ph.D.