Six Things to Know about P-Values

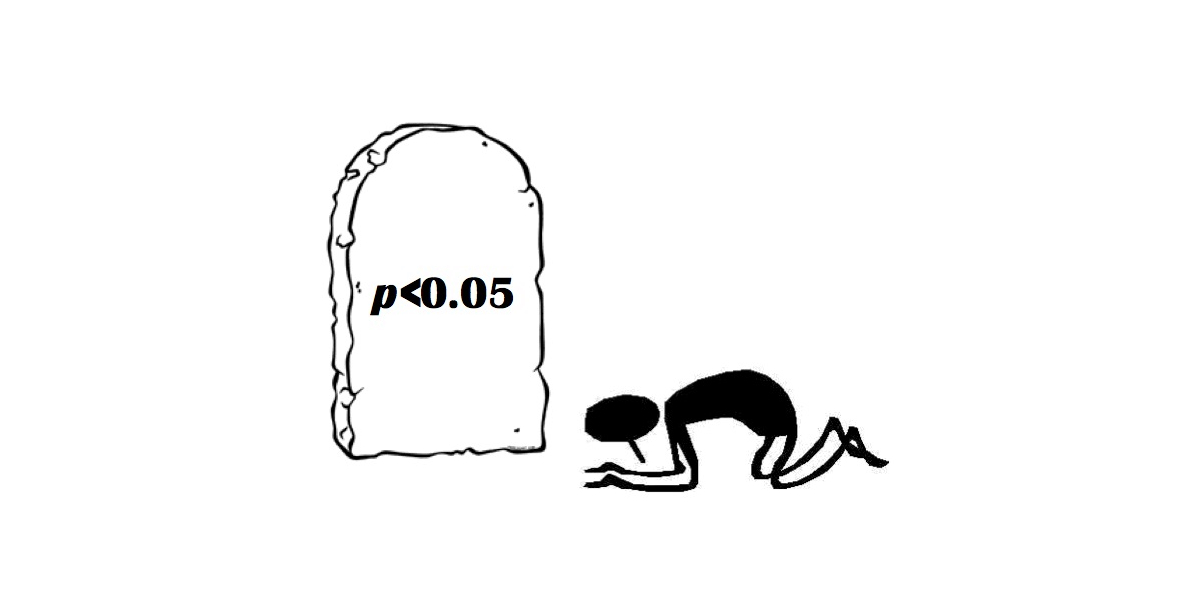

Whenever I write a research report, I feel strongly ambivalent about flagging data as “statistically significant.” If possible, I try to avoid it altogether. Why? Because p-values and concepts of statistical significance are often misunderstood, misused, and misleading. Indeed, the problem is so prevalent that one well-respected scientific journal in social psychology (Basic and Applied Social Psychology) has now banned “null hypothesis significance testing procedures” from articles that are submitted for review and publication.

Not surprisingly, this set off a firestorm of debate. Should social research (and by extension, market research) be using p-values and tests of significance? Last month, the American Statistical Association weighed in on the debate with an official statement about p-values. It lays out a clear definition of a p-value and six guiding principles that ought to govern any decision about whether to use p-values.

We agree with it wholeheartedly, and here we summarize the crux of the ASA’s statement:

A p-value is the probability under a specified statistical model that a statistical summary of the data (for example, the sample mean difference between two compared groups) would be equal to or more extreme than its observed value.
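To make that definition concrete, here is a minimal sketch of one way to compute such a probability: an exact permutation test on the difference in means between two small groups. The "specified statistical model" here is the assumption that group labels are interchangeable, and the ratings are made-up numbers for illustration only. The function name `permutation_p_value` is hypothetical, not something from the ASA statement.

```python
from itertools import combinations
from statistics import mean


def permutation_p_value(group_a, group_b):
    """Exact two-sided permutation p-value for a difference in means.

    Model assumed: group labels are interchangeable, so every way of
    splitting the pooled values into two groups is equally likely.
    """
    pooled = list(group_a) + list(group_b)
    n_a = len(group_a)
    observed = abs(mean(group_a) - mean(group_b))

    extreme = 0  # relabelings at least as extreme as what we observed
    total = 0    # all possible relabelings
    for idx in combinations(range(len(pooled)), n_a):
        chosen = set(idx)
        a = [pooled[i] for i in chosen]
        b = [pooled[i] for i in range(len(pooled)) if i not in chosen]
        # Small tolerance so floating-point ties count as "equal to".
        if abs(mean(a) - mean(b)) >= observed - 1e-12:
            extreme += 1
        total += 1
    return extreme / total


# Hypothetical satisfaction ratings from two groups of five respondents.
ratings_a = [7, 8, 6, 9, 7]
ratings_b = [5, 6, 7, 5, 6]
p = permutation_p_value(ratings_a, ratings_b)
print(f"p-value under the label-shuffling model: {p:.3f}")
```

Note what the result is: the share of relabelings that produce a difference "equal to or more extreme than" the observed one. It says nothing directly about whether the two groups truly differ.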

P-values should be used (or not) in accordance with the following six principles:

- P-values can indicate how incompatible the data are with a specified statistical model. But a p-value is just one approach to measuring incompatibility, and it is only accurate to the extent that each of the assumptions underlying its calculation holds.

- P-values do not measure the probability that the studied hypothesis is true, or the probability that the data were produced by random chance alone. Rather, a p-value is a statement about the observed data in relation to a specified hypothesis, not a statement about the hypothesis itself.

- Scientific conclusions and business or policy decisions should not be based only on whether a p-value passes a specific threshold. Context is critical, and that context includes the design of the research, the quality of the measurement, and any deviations from theoretical assumptions.

- Proper inference requires full reporting and transparency. As such, researchers should disclose the number of hypotheses explored during the study, all data collection decisions, all statistical analyses conducted, and all p-values computed.

- A p-value, or statistical significance, does not measure the size of an effect or the importance of a result. Statistical significance is not equivalent to scientific, human, or economic significance. Smaller p-values do not necessarily imply the presence of larger or more important effects, and larger p-values do not imply a lack of importance or even lack of effect.

- By itself, a p-value does not provide a good measure of evidence regarding a model or hypothesis.
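The principle that a p-value does not measure the size of an effect can be illustrated with a small sketch. It assumes a simple two-sample z-test with a known, common standard deviation and equal group sizes, which is a simplification chosen for illustration and not a recommendation from the ASA. The function name and all of the numbers are hypothetical.

```python
from math import sqrt
from statistics import NormalDist


def two_sample_z_p_value(mean_diff, sd, n_per_group):
    """Two-sided p-value for a difference in means.

    Assumes a known common standard deviation and equal group sizes
    (simplifying assumptions for this illustration).
    """
    standard_error = sd * sqrt(2 / n_per_group)
    z = mean_diff / standard_error
    return 2 * (1 - NormalDist().cdf(abs(z)))


# The same tiny effect (0.05 points on a scale with SD 1.0) in both studies;
# only the sample size differs.
small_study = two_sample_z_p_value(mean_diff=0.05, sd=1.0, n_per_group=100)
large_study = two_sample_z_p_value(mean_diff=0.05, sd=1.0, n_per_group=10_000)

print(f"n = 100 per group:    p = {small_study:.4f}")
print(f"n = 10,000 per group: p = {large_study:.4f}")
```

The effect is identical in both calls, yet the larger sample pushes the p-value below the conventional 0.05 threshold while the smaller one does not. The p-value reflects sample size as much as it reflects the effect, which is why the effect size itself should be reported alongside it.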

Despite my ambivalence, I do rely on various tests of significance all the time in my work. Even when their key theoretical assumptions are violated, they give me a feel for the amount of variation underlying the point estimates, which is incredibly useful as I analyze and interpret data. But when I need to report the results, I proceed with as much caution as our clients will allow. I urge you to do the same.