How Data Can Highlight Mistakes

We are often surprised by the number of senior researchers in the market research industry who never touch raw data. Often they don’t even have the tools, since “data processing” is outsourced to lower levels or other countries. It is surprising because in nearly all of our work, getting into the data and puzzling over anomalies or hypotheses yields much deeper insight.

Here is an example of how critical it can be to look closely at your data, and in this case, very early in the data collection process. We launched an online survey last week and got reports back from our sample supplier that incidence was just one-third of what we expected, which would have serious feasibility and cost implications.

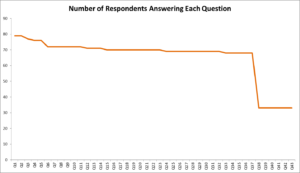

But once we looked at their report portal, we saw that for every qualified respondent completing the survey, two qualified respondents quit before finishing. That’s an unusually high ratio of “suspends” as we call them. If incidence was being judged from completes alone, that ratio by itself could account for the shortfall, since only one in three qualified respondents was finishing. So what was the problem? Were we just getting lousy respondents who did not want to seriously participate in a survey? Was the survey too difficult, tedious, boring, or confusing? One source of answers (rarely examined) is to look at the data question by question to identify where in the survey people are quitting.

Nearly every respondent who quit got close to finishing and then dropped off at exactly the same point, which was odd because the most difficult questions were earlier in the survey. In fact, the question where most ended up quitting was an interesting drag-and-drop interactive exercise. Ah, that was the problem. The programming for the interactive piece was flawed and respondents were being kicked out.
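For readers who want to try this kind of check themselves, here is a minimal sketch of a question-by-question drop-off count. It is not the tool we used. The record layout, the `status` field, and the question names (including `Q4_dragdrop`) are all hypothetical, and the sample records are made up purely to show the shape of the output.

```python
from collections import Counter

def dropoff_by_question(responses, question_order):
    """Count how many suspended respondents stopped at each question.

    Assumed (hypothetical) layout: each response is a dict with a
    "status" key plus one key per question that was answered.
    The drop-off point is the first question, in survey order,
    that has no answer.
    """
    counts = Counter()
    for response in responses:
        if response.get("status") != "suspended":
            continue  # only respondents who quit are of interest here
        for question in question_order:
            if response.get(question) is None:
                counts[question] += 1
                break
    # Return counts in survey order so a spike is easy to spot.
    return [(question, counts[question]) for question in question_order]

# Made-up illustration data, not real survey results.
question_order = ["Q1", "Q2", "Q3", "Q4_dragdrop", "Q5"]
responses = [
    {"status": "complete", "Q1": 1, "Q2": 2, "Q3": 1, "Q4_dragdrop": "a", "Q5": 3},
    {"status": "suspended", "Q1": 1, "Q2": 2, "Q3": 1},
    {"status": "suspended", "Q1": 2, "Q2": 1, "Q3": 3},
    {"status": "suspended", "Q1": 2},
]

for question, quits in dropoff_by_question(responses, question_order):
    print(f"{question}: {quits} suspended here")
```

If suspends pile up at a single late question rather than spreading across the hard ones, that points to a technical fault at that question more than to respondent fatigue.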

Only after a good deal of angst did the programming team test, re-test, and confirm the error. Everybody involved in this effort resisted: the sampling provider, the programmers, the survey tool developers, the questionnaire designers. They have all done this work hundreds of times, so there can’t be anything wrong with their piece of it, right?

Let the data speak. It will tell you where the mistakes are. There are lots of places and moments where things may go wrong. If the top people responsible for the project do not have immediate visibility into the data, they are unable to suggest smart solutions, and bad research will just keep happening.

—Joe Hopper, Ph.D.